2025 Reflections... 2026 Next Steps

From Dendrites to Digital: A 2025 Retrospective and a Look Ahead

As 2025 comes to a close, I find myself in a mood of deep reflection. This year marked a massive transition in my life: I retired from Southern Methodist University (SMU) after 31 years of teaching Computer Science. Looking back, I am filled with gratitude for my great colleagues, but especially for my students. They often thanked me for the knowledge I shared, but I’m not sure they realized that they gave back to me far more than I gave to them. For me, teaching and explaining concepts has always been my ultimate pathway to learning.

The Berkeley Shift: From Theory to Pitch Decks

Retirement, however, didn't mean slowing down. I transitioned to teaching Generative AI classes at Berkeley, and I have been thoroughly enjoying this new environment. The energy here is distinct, particularly the intense focus on the start-up ecosystem.

I’ve had to learn new tricks. Beyond the algorithms, I am now learning the ins and outs of helping students craft pitch decks and lay out ideas for starting their own companies. It is invigorating to be surrounded by that builder’s mindset.

Needless to say, following AI developments has been a dominant theme of my 2025—not just to update my syllabi, but to use these models as personal assistants and associates. I treat the AI like a brilliant graduate student or a junior colleague; it is someone I can brainstorm with, bounce ideas off of, and use to sharpen my own thinking.

Full Circle: The Neuroscience Connection

Perhaps the aspect of 2025 I am most excited about is a personal intellectual renaissance. The rise of neural networks has compelled me to revisit my early work at Emory in Atlanta in the late 1960s and early 1970s.

Back then, I was deep in the world of biology. I dissected human brains in my Neuroanatomy class alongside med students. In the lab, I performed neurophysiology experiments, recording brain activity with implanted electrodes in the thalamus and reticular formation. I was fascinated by the machinery of the mind.

However, that love affair was eclipsed the day a Digital Equipment PDP-8 appeared in our lab. I was immediately smitten. I switched my focus from wetware to hardware, heading over to Georgia Tech to understand how software and hardware could come together to build early AI systems.

Cajal and the Library

Now that AI has captured the world's attention, I’ve been pulling out my dusty books. I found myself reflecting on a one-on-one seminar I had the great fortune to take with Professor Jerome Sutin at Emory. I recall being "scared stiff" meeting with him each week. To my surprise, he didn't direct me to the latest research on the hypothalamus (his specialty). Instead, he assigned me the works of Lorente de Nó and Santiago Ramón y Cajal.

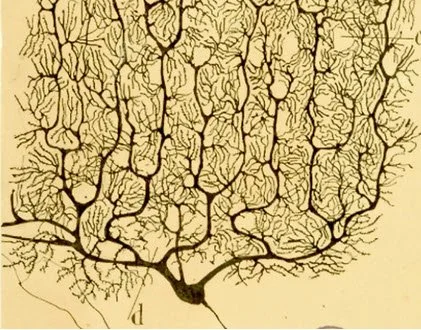

These weren't just dusty old papers. Santiago Ramón y Cajal, often called the father of modern neuroscience, supplied the decisive anatomical evidence for the neuron doctrine: the idea that the nervous system is made up of discrete individual cells rather than a continuous mesh. Using Golgi's staining method and his own remarkable artistic talent, he mapped the intricate architecture of the brain's circuitry. Lorente de Nó, a student of Cajal, extended this work by describing the columnar organization of the cortex, a concept that loosely prefigures the layered architectures we see in deep learning today.

Santiago Ramón y Cajal’s Drawing of Pyramidal Cells

This past year, wandering the wondrous stacks of the Berkeley Library, I tracked down a copy of The World of Ramón y Cajal. Seeing those drawings again took me right back to my days at Emory, bridging a 50-year gap between biological neurons and the artificial ones I teach today.

Advice for the AI Era: The Three Dimensions

With AI making huge leaps in coding capabilities, many students, past and present, have asked me how to navigate their careers in this tumultuous atmosphere. My answer is to focus on three distinct dimensions:

1. Understand the Tech

Ease up on Netflix and video games (I say this as someone who used to play Battlefield 2 for hours). Instead, digest everything 3Blue1Brown has to say about neural networks and transformers. Read the technical articles from Anthropic and Google. Use NotebookLM to organize and summarize what you read.

But here is my "secret sauce," which I used heavily in 2025: get a spiral notebook (one that you can bend back), a good pen (the Sarasa is my go-to implement), and WRITE and DRAW what you are learning. When you write by hand, you summarize. Your brain isn't just trying to hit the right keys; it is synthesizing reflections from "multi-modal input." Your visual system, your motor system, and the rest of your cortex work together to coordinate what you transcribe. That information and those images are encoded into memory, a process in which the hippocampus plays a central role, in a way that typing does not seem to replicate.

2. Understand the Players

You need to know who is driving the ship. Understand the companies and the individuals vying for top dog status. Use YouTube to find interviews with the luminaries:

Andrej Karpathy (@karpathy): He produces detailed material and open teaching resources on building neural networks from scratch. His work is essential for understanding modern deep learning systems.

Yann LeCun (@ylecun): Meta's longtime Chief AI Scientist. Follow him for incisive, often contrarian takes on representation learning and "world models."

Andrew Ng (@AndrewYNg): A perfect mix of pedagogy, MLOps, and applied LLM and computer vision work. He remains one of the best high-signal "bridge" accounts connecting research to practice.

Lex Fridman (@lexfridman): His long-form conversations surface many of the above plus adjacent thinkers; it’s a great discovery mechanism for new people to follow.

3. Be Proactive and MAKE!

In the 1990s, I had the good fortune to meet John Cage, the avant-garde composer and philosopher. I recently came across a quote attributed to him that I now incorporate into all my classes and advice to students:

"Nothing is a MISTAKE. There is no WIN. There is no LOSE. Only MAKE!"

Every week, design and implement a prototype project for yourself. Build a piece of software that might help you or someone else in their work. Use AI. Engage in "Vibe Coding": see how well you can SPECIFY what you want the AI to build rather than typing the syntax yourself. Try your hand at building Agents.

As they say, "Just DO IT." Sure, you'll make mistakes and wander down blind alleys. But remember, learning what does NOT work is perhaps more valuable in the long run than getting it right the first time. And when you get stuck, remember you have a superstar at your disposal. Better Call Claude—or Gemini, or whomever. Prompt and you shall receive.

Looking Ahead to 2026

As I look toward 2026, the calendar is already filling up with exciting challenges:

I will continue developing specialized courses on AI and writing more articles.

I’m thrilled to start teaching an online GenAI class at the University of Bologna Business School in their "AI for Business" MS program.

On the social impact front, I will be teaching AI concepts in the Justice Through Code program at Columbia University. The program serves formerly incarcerated individuals, helping them rebuild their lives through technology education.

A Request for You

As I map out my content for 2026, I want to know what you need. Fill out the form at https://suggest-ai-video.streamlit.app

Let me know what topics, terms, or concepts you find confusing, and I will try to address them in upcoming "Dr. C YouTube Shorts." If I pick your topic, I’ll be sure to give you a shout-out in the video!

2025 was a year of rediscovery and transition. I can’t wait to see what 2026 brings.